Section2.3Line Graphs and Covers

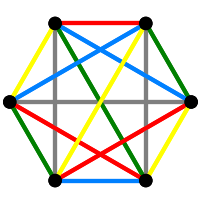

Let \(D\) be the incidence matrix of an orientation of the graph \(X\). Then\[DD^T = \De -A,\quad D^TD = 2I +S\] where \(\De\) is the diagonal matrix of valencies of \(X\) and \(S\) is a symmetric matrix with zero diagonal and off-diagonal entries from \(\{0,1,-1\}\). In other words, \(S\) is a signed adjacency matrix. The entries of \(S\) are indexed by \(E(X)\), and the \(ab\)-entry is non-zero if and only if the edges \(a\) and \(b\) are adjacent in the line graph \(L(X)\) of \(X\), so we have a signed adjacency matrix for \(L(X)\).

A signed ajacency matrix \(T\) of a graph \(Y\) determines a two-fold cover \(Z\) of \(Y\), as follows. The vertex set of the cover is \[V(Y) \times \{0,1\}\] and \((u,i)\) and \((v,j)\) are adjacent if and only if \(u\sim v\) and either \[i=j,\quad T_{u,v}=1\] or \[i\ne j,\quad T_{u,v}=-1.\] It is easy to check that the map that sends \((u,i)\) to \((u,1-i)\) is an automorphism of \(Z\). In matrix terms, we get the adjacency matrix of \(Z\) by applying the following substitutions to the entries of \(A(Y)\): \[0\to\pmat{0&0\\0&0},\qquad 1\to\pmat{1&0\\0&1} \qquad -1\to\pmat{0&1\\1&0}.\]

The pairs \[\{(u,0),(u,1)\}\] are called the fibres of the cover, you might verify that these form an equitable partition of the cover, and that the quotient over the fibres is \(Y\). Thus we see that the characteristic polynomial of the cover is divisible by the characteristic polynomial of the graph.

Lemma2.3.1

\[\phi(Z,t) =\phi(T,t)\phi(Y,t).\]

(Outline.) Let \(H\) be the matrix \[\frac{1}{\sqrt{2}} \pmat{1&1\\1&-1}\] and let \(K\) be the block-diagonal matrix formed using \(|V(Y)|\) copies of \(H\). If \(M\) is a complex matrix, let \(|M|\) denote the matrix we get by replacing each entry of \(M\) by its absolute value. Then \(K\) is orthogonal and symmetric and \(KA(Z)K\) is permutation equivalent to \[\pmat{S&0\\0&|S|}.\]

Here \(|S|=A(Y)\). We offer another view of this result. If \(A\) and \(B\) are symmetric \(01\)-matrices such that \(A\circ B=0\), then \(A-B\) is a signed adjacency matrix and \[ \pmat{A&B\\B&A}\] is the adjacency matrix of the corresponding cover. We can now use the following: \[\frac12\pmat{I&I\\I&-I}\pmat{A&B\\B&A}\pmat{I&I\\I&-I} =\pmat{A+B&0\\0&A-B}.\]

This leads us to an easy construction of the adjacency matrix of the cover.

Now we turn to our line graphs. The cover can be constructed as follows. Choose one arc \((u,v)\) for each edge \(\{u,v\}\) of \(X\). (This defines an orientation of the graph.) Our matrix \(S\) is a signing of \(A(L(X))\)—its rows and columns are indexed by our chosen arcs, and the entry corresponding to a pair of distinct overlapping arcs is 1 if they have the same head or tail, and \(-1\) otherwise. Rather than represent the vertices of the cover by pairs \(((u,v),i)\), we proceed thus: if \((u,v)\) is one of our chosen arcs then we use \((u,v)\) to denote \(((u,v),0)\) and \((v,u)\) for \(((u,v),1)\). The following procedure implements this.

We test that our two constructions agree on the Petersen graph, and see that cover is isomorphic to the line graph of the direct product of the Petersen graph with \(K_2\). (This product is itself a cover of the Petersen graph.)